Despite major digital and AI investments, many organizations still operate in silos that slow down end-to-end decision-making. WCI breaks these silos by creating a unified, composable model of the entire value chain and optimizing it holistically.

As our customer SCG Chemicals, one of ASEAN’s largest petrochemical companies, puts it:

“WCI creates a unified knowledge layer that enables semantic intelligence, allowing AI to understand relationships and context across the entire value chain for optimized decision-making and end-to-end supply chain efficiency.”

Although quantum advantage is provable for certain optimization problems, turning it into real business value remains challenging. Most current efforts focus on replacing classical solvers for MILP or QUBO formulations with quantum ones, but this approach faces inherent limitations: classical solvers are already extremely mature, and most mathematical models only approximate reality, so any marginal improvement can easily disappear once the model is deployed in real operations.

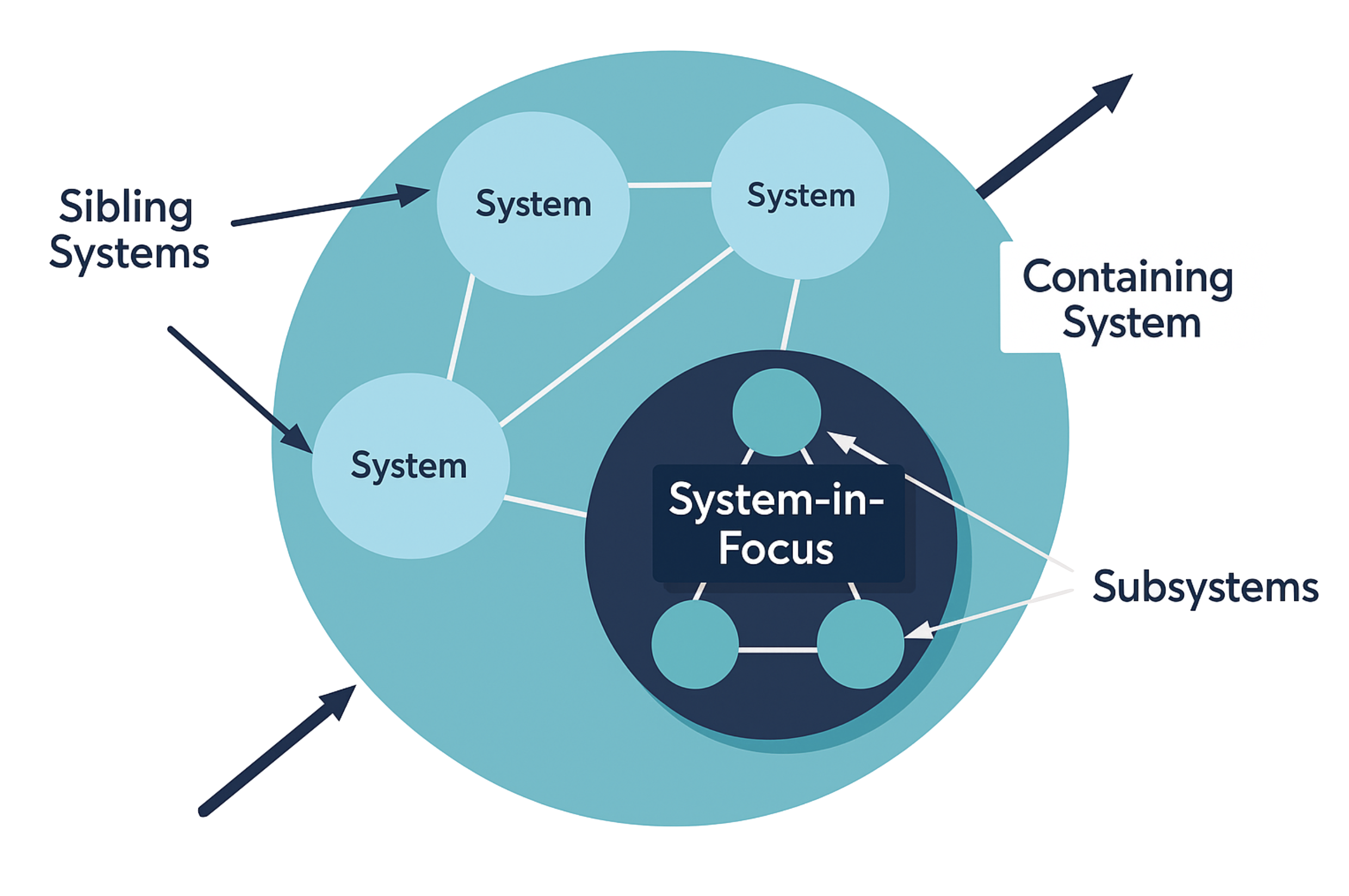

From our customer work, we have learned that quantum advantage is unlikely to emerge within a single business or operating unit. The real opportunity lies in coordinating multiple operating units, where classical software struggles. Traditional tools lack a mathematically rigorous way to model compositional, system-of-systems interactions, and joint end-to-end optimization rapidly becomes intractable.
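A simplified count shows why coupling units makes the joint problem grow so quickly. This is an illustration under an assumed setup, not a measurement from customer models: suppose each of $k$ operating units makes $n$ independent yes/no decisions. The joint decision space is then the product of the per-unit spaces:

$$
|X_{\text{joint}}| \;=\; \prod_{i=1}^{k} |X_i| \;=\; \left(2^{n}\right)^{k} \;=\; 2^{kn}
$$

With $k = 5$ units and $n = 20$ decisions each, every unit on its own has $2^{20} \approx 1.05 \times 10^{6}$ configurations, but the joint space has $2^{100} \approx 1.27 \times 10^{30}$. Classical solvers prune most of this space rather than enumerating it, yet the multiplicative growth is the reason coupled, end-to-end models become hard so fast.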

Our approach therefore focuses on two layers:

1. A compositional modeling framework built on simple, intuitive diagrams and grounded in category theory, specifically Ologs and polynomial functors.

2. Mapping structural components of these models to problem classes where quantum algorithms provide known advantages.

We are not building a quantum solver. We are building the modeling layer that makes quantum advantage practical, and we refine it continuously through real customer feedback. Publications detailing this framework will be released soon.

WCI does not replace your MILP, QUBO, or other classical solvers; instead, it complements them by addressing two common limitations.

First, classical solvers typically require complex mathematical modeling that most domain experts find difficult to understand or maintain. Second, these solvers do not naturally capture the system-of-systems structures that arise in complex multi-agent networks.

WCI tackles these challenges by replacing manual mathematical modeling with composable diagrams. These diagrams can be assembled into larger models or partially modified without disrupting the entire network. Their interoperability is guaranteed by category theory, a branch of mathematics that studies the structure of mathematical systems themselves.
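The reason composable diagrams fit together can be illustrated with the core rule of a category: two components compose only when the output type of the first matches the input type of the second, composition is associative, and every type has an identity. The Python sketch below is a hypothetical toy, not WCI's internal representation; the `Box` class, its `then` method, the port-type strings, and the yield factors are all illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class Box:
    """Hypothetical diagram component with one typed input and one typed output port."""
    name: str
    dom: str                    # type of the input port
    cod: str                    # type of the output port
    fn: Callable[[Any], Any]    # behavior of the component

    def then(self, other: "Box") -> "Box":
        # Sequential composition is defined only when the ports match,
        # exactly like composing morphisms f: A -> B and g: B -> C.
        if self.cod != other.dom:
            raise TypeError(
                f"cannot wire {self.name} ({self.cod}) into {other.name} ({other.dom})"
            )
        return Box(f"{self.name} ; {other.name}", self.dom, other.cod,
                   lambda x: other.fn(self.fn(x)))


def identity(t: str) -> Box:
    """Identity component on a port type: composing with it changes nothing."""
    return Box(f"id[{t}]", t, t, lambda x: x)


# Illustrative units and yield factors only (not real plant data).
cracker = Box("cracker", "naphtha_t", "ethylene_t", lambda tonnes: 0.5 * tonnes)
polymer_plant = Box("polymer_plant", "ethylene_t", "polyethylene_t", lambda tonnes: 0.9 * tonnes)

naphtha_to_polymer = cracker.then(polymer_plant)   # larger model assembled from parts
```

Swapping `polymer_plant` for another box with the same port types leaves the rest of the chain untouched, while a mismatched wiring such as `polymer_plant.then(cracker)` is rejected immediately; that is the kind of guarantee the categorical structure provides.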

Once defined, WCI automatically compiles these diagrams into precise optimization models suitable for either classical or quantum execution.
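As a rough illustration of what compiling a diagram into a model "suitable for either classical or quantum execution" can mean, the sketch below turns a tiny, hypothetical assignment diagram (orders wired to candidate plants, with a cost on each wire) into a QUBO using the standard quadratic-penalty encoding of a one-hot constraint. QUBO is a format accepted by both classical heuristics and quantum annealers; here an exhaustive search stands in for either backend. The function names, costs, and penalty are assumptions, not WCI's compiler output.

```python
from itertools import product


def compile_assignment_qubo(costs: dict[tuple[str, str], float], penalty: float):
    """Encode 'assign each order to exactly one plant' as a QUBO.

    Returns (variables, Q, offset): Q maps index pairs (i, j), i <= j, to
    coefficients, and offset is the constant dropped from the penalty terms.
    """
    variables = sorted(costs)          # one binary x per (order, plant) wire
    orders = {order for order, _ in variables}
    Q: dict[tuple[int, int], float] = {}
    for i, var in enumerate(variables):
        # Wire cost plus the linear part of penalty * (sum_p x - 1)^2, using x^2 = x.
        Q[(i, i)] = costs[var] - penalty
        for j in range(i + 1, len(variables)):
            if variables[j][0] == var[0]:
                # Pairwise part of the penalty: two plants chosen for the same order.
                Q[(i, j)] = 2 * penalty
    return variables, Q, penalty * len(orders)


def energy(Q: dict[tuple[int, int], float], x: tuple[int, ...]) -> float:
    return sum(c * x[i] * x[j] for (i, j), c in Q.items())


def brute_force(Q: dict[tuple[int, int], float], n: int) -> tuple[int, ...]:
    """Exhaustive stand-in for a classical or quantum QUBO backend (tiny n only)."""
    return min(product((0, 1), repeat=n), key=lambda x: energy(Q, x))


# Illustrative costs; with nonnegative costs, a penalty above the largest cost
# guarantees that breaking the one-hot constraint never lowers the energy.
costs = {("o1", "A"): 3, ("o1", "B"): 5, ("o2", "A"): 4, ("o2", "B"): 1}
variables, Q, offset = compile_assignment_qubo(costs, penalty=10)
best = brute_force(Q, len(variables))
assignment = [var for var, bit in zip(variables, best) if bit]
total_cost = energy(Q, best) + offset   # adding the offset back recovers the true cost
```

Because the compiled artifact is just the coefficient map `Q`, the same output could be handed to a classical heuristic or a quantum annealer without touching the diagram that produced it.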

Under the hood, WCI is designed for hierarchical and multi-level optimization, in which a parent system sets goals or parameters for interacting subsystems, each with its own local optimizer and constraints. These subsystems may expose only limited internal details, or remain entirely opaque, while still participating coherently in the global optimization.
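A minimal sketch of the parent/subsystem pattern, under simplifying assumptions: the parent splits a shared feedstock budget between two hypothetical units, and each unit is an opaque callable that reports only its best local profit for the allocation it receives. Its margin, capacity, and fixed cost stay hidden inside a closure. The parent simply enumerates splits; practical multi-level methods (for example price-based decomposition) are far more efficient, and nothing here is claimed to be WCI's algorithm.

```python
from typing import Callable

# A subsystem exposes only "allocation in, best local profit out".
LocalOptimizer = Callable[[int], float]


def make_unit(margin: float, capacity: int, fixed_cost: float) -> LocalOptimizer:
    """Hypothetical subsystem whose internals are hidden from the parent."""
    def best_profit(allocation: int) -> float:
        best = 0.0  # producing nothing is always feasible
        # Local constraint: output is limited by both the allocation and capacity.
        for q in range(1, min(allocation, capacity) + 1):
            best = max(best, margin * q - fixed_cost)
        return best
    return best_profit


def coordinate(budget: int, unit_a: LocalOptimizer, unit_b: LocalOptimizer):
    """Parent level: choose the budget split; each unit then optimizes locally."""
    best_split, best_total = None, float("-inf")
    for share_a in range(budget + 1):
        total = unit_a(share_a) + unit_b(budget - share_a)
        if total > best_total:
            best_split, best_total = (share_a, budget - share_a), total
    return best_split, best_total


# Illustrative parameters only.
unit_a = make_unit(margin=5, capacity=6, fixed_cost=4)
unit_b = make_unit(margin=3, capacity=8, fixed_cost=2)
split, total = coordinate(10, unit_a, unit_b)
```

The parent never inspects `margin`, `capacity`, or `fixed_cost`; it only queries each unit's response to a proposed allocation, which is what lets opaque subsystems take part in the global decision.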

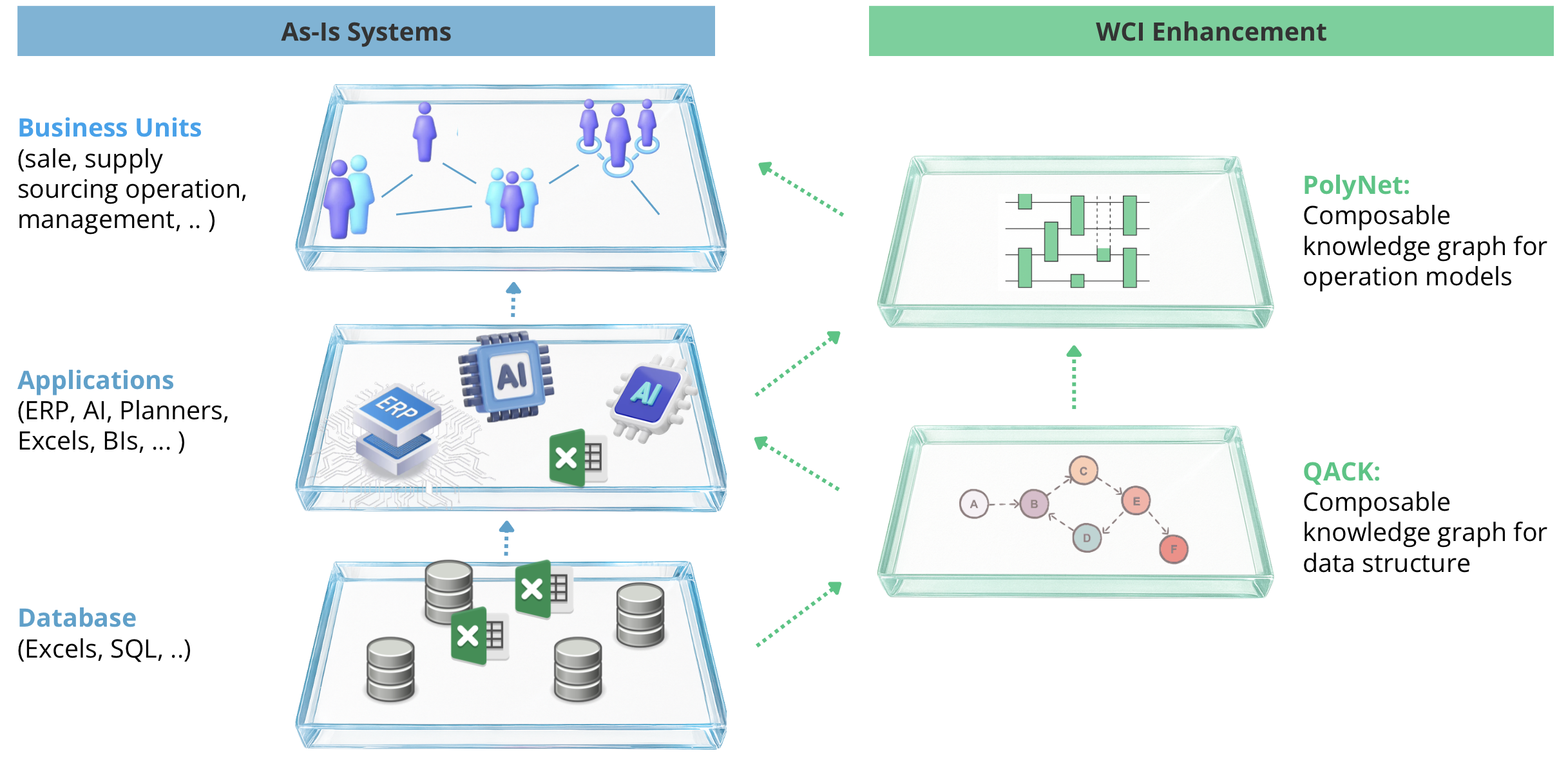

WCI makes databases, human agents and AI agents interoperable without replacing your existing digital infrastructure. At a high level, it consists of two application layers: Qack and PolyNet.

Qack is a web service that generates and manages databases with schemas designed for semantic interoperability. Users specify data semantics by drawing simple diagrams of circles and arrows called Ontology Logs, or “Ologs”. Qack then compiles these Olog diagrams into database schemas and automatically deploys a PostgreSQL instance with a corresponding Swagger UI, typically in a single action.
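The Olog-to-schema step can be sketched with the usual reading of an olog as a database schema: each entity box becomes a table, each functional arrow to another entity becomes a non-null foreign key (every instance maps to exactly one target), and each arrow to an attribute box becomes a typed column. The `Olog` class, the attribute-type table, and the naming rules below are hypothetical and do not describe Qack's actual generated schemas.

```python
from dataclasses import dataclass

# Hypothetical mapping from attribute boxes to PostgreSQL column types.
ATTRIBUTE_TYPES = {"a name": "TEXT", "a quantity in tonnes": "NUMERIC", "a date": "DATE"}


@dataclass
class Olog:
    objects: list[str]                    # entity boxes, e.g. "a plant"
    arrows: list[tuple[str, str, str]]    # (source box, arrow label, target box)


def sql_name(text: str) -> str:
    """'a production order' -> 'production_order'; 'is produced at' -> 'is_produced_at'."""
    words = text.lower().split()
    if words and words[0] in ("a", "an"):
        words = words[1:]
    return "_".join(words)


def compile_to_ddl(olog: Olog) -> list[str]:
    for src, label, tgt in olog.arrows:
        if src not in olog.objects:
            raise ValueError(f"arrow {label!r} starts at unknown box {src!r}")
        if tgt not in olog.objects and tgt not in ATTRIBUTE_TYPES:
            raise ValueError(f"arrow {label!r} ends at unknown box {tgt!r}")

    statements = []
    # Pass 1: one table per entity box, with one column per attribute arrow.
    for obj in olog.objects:
        columns = ["id SERIAL PRIMARY KEY"]
        for src, label, tgt in olog.arrows:
            if src == obj and tgt in ATTRIBUTE_TYPES:
                columns.append(f"{sql_name(label)} {ATTRIBUTE_TYPES[tgt]} NOT NULL")
        statements.append(f"CREATE TABLE {sql_name(obj)} ({', '.join(columns)});")
    # Pass 2: entity-to-entity arrows become foreign keys, added once all tables exist.
    for src, label, tgt in olog.arrows:
        if tgt in olog.objects:
            statements.append(
                f"ALTER TABLE {sql_name(src)} ADD COLUMN {sql_name(label)}_id "
                f"INTEGER NOT NULL REFERENCES {sql_name(tgt)}(id);"
            )
    return statements


supply_olog = Olog(
    objects=["a plant", "a production order"],
    arrows=[
        ("a plant", "name", "a name"),
        ("a production order", "quantity", "a quantity in tonnes"),
        ("a production order", "is produced at", "a plant"),
    ],
)
ddl = compile_to_ddl(supply_olog)
for statement in ddl:
    print(statement)
```

Emitting foreign keys in a second pass keeps the output valid regardless of the order in which boxes were drawn, including diagrams whose arrows form cycles between entities.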

PolyNet is a web service for expressing the business logic that governs how Olog data changes and interacts, and it is where optimization takes place. PolyNet may delegate computations to external classical or quantum solvers via a RESTful API and then write the resulting values back into the Olog structure.
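The delegate-and-write-back loop can be sketched as follows. Everything here is an assumption for illustration: the JSON request and response shapes, the `production_order` table and its columns, and the `rest_solver` wrapper around a hypothetical endpoint. Python's built-in `sqlite3` stands in for PostgreSQL so the example runs without a database server, and a local stub replaces the external solver.

```python
import json
import sqlite3
import urllib.request
from typing import Callable

# A solver takes a JSON-like problem and returns a JSON-like result.
Solver = Callable[[dict], dict]


def rest_solver(url: str, timeout: float = 30.0) -> Solver:
    """Wrap a hypothetical JSON-over-HTTP solver endpoint as a Solver."""
    def solve(problem: dict) -> dict:
        request = urllib.request.Request(
            url,
            data=json.dumps(problem).encode("utf-8"),
            headers={"Content-Type": "application/json"},
            method="POST",
        )
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return json.load(response)
    return solve


def local_stub_solver(problem: dict) -> dict:
    """Offline stand-in: plan each order's full demand, capped at 100 units."""
    return {"plan": {oid: min(d, 100) for oid, d in problem["demands"].items()}}


def run_step(conn: sqlite3.Connection, solver: Solver) -> None:
    # 1. Read the current state from the table backing the Olog.
    rows = conn.execute("SELECT id, demand FROM production_order").fetchall()
    problem = {"demands": {str(order_id): demand for order_id, demand in rows}}
    # 2. Delegate the optimization to an external (classical or quantum) solver.
    result = solver(problem)
    # 3. Write the solver's values back in a single transaction.
    with conn:
        conn.executemany(
            "UPDATE production_order SET planned_quantity = ? WHERE id = ?",
            [(qty, int(order_id)) for order_id, qty in result["plan"].items()],
        )


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE production_order ("
    "id INTEGER PRIMARY KEY, demand INTEGER NOT NULL, planned_quantity INTEGER)"
)
conn.executemany("INSERT INTO production_order (id, demand) VALUES (?, ?)", [(1, 40), (2, 130)])
run_step(conn, local_stub_solver)
```

Against a real service, the stub would be replaced by something like `run_step(conn, rest_solver("https://solver.example.com/solve"))`, where the URL is only a placeholder. Keeping the solver behind a single callable type is what allows a classical or quantum backend to be swapped without changing the read and write-back logic.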

Because all data ultimately resides in a standard PostgreSQL database, any UI or application layer can be built on top of Qack and PolyNet using familiar tools and workflows.